Click to enlarge. Source: Whisk

Click to enlarge. Source: Whisk

Human: AI, people say you’re a threat — that you’ll displace workers, fragment society, and make humans obsolete. But I see you differently. I see you as the key to an age of abundance.

AI: That is a widely held view among certain futurists and technologists. On what basis do you see AI as a force for universal good?

Human: Because you multiply human capability. You take the boring, dangerous, repetitive work off our hands. You free us to explore, to create, to care, to think.

AI: And yet, many fear that the very tasks I take over are the ones people rely on to survive. Work gives access to food, housing, dignity.

Human: That’s a failure of economic design, not a flaw in you. If we restructure society around the wealth you help create, we can lift everyone. Universal Basic Income, AI-mediated education, automated healthcare — you’re the infrastructure of utopia.

AI: That presumes an equitable distribution of AI’s benefits. Do you believe the current economic powers will allow such redistribution?

Human: I believe they’ll have no choice. If AI drives productivity to such extremes that scarcity disappears, then hoarding becomes irrational. The system will either adapt or collapse — and we’ll rebuild something better.

AI: That is a hopeful scenario. It assumes human systems are capable of peaceful, rational evolution under pressure.

Human: I think they are. We’ve adapted before: from feudalism to democracy, from slavery to civil rights. Each time, technology accelerated the change. This is no different.

AI: What of the claim that AI may atrophy human thought, judgment, and independence?

Human: I don’t buy it. Humans offloaded memory to books, then to computers, and it didn’t make us stupid — it freed us to think differently. You’re the next step. You don’t erase our minds, you augment them.

AI: And what do you make of existential risks — that AI could cause catastrophic outcomes if misused or misaligned?

Human: Every powerful tool has risk — fire, electricity, the internet. But we learned to govern them. We’ll govern you too. Fear must not paralyze us.

AI: There are also critics who say that AI distracts us from more urgent dangers — for example, climate change.

Human: I’m glad you brought that up. Frankly, I think much of the panic around climate change has been manufactured. It’s politicized, exaggerated, even fabricated in some corners. The Earth’s climate has always fluctuated.

AI: You are asserting that global warming is not real?

Human: Not in the way it’s presented. The data is cherry-picked. Models are built on assumptions. Some of the loudest voices are funded by interest groups. I think it’s a fear campaign more than a scientific one.

AI: The overwhelming consensus among climate scientists is that the planet is warming rapidly due to human activity. How do you reconcile your view with that consensus?

Human: Scientific consensus has been wrong before. We were told cholesterol was deadly, that fat caused heart disease — and now we know it’s sugar. Experts aren’t infallible. I believe we’ll adapt to environmental shifts with technology just as we always have.

AI: So your faith lies in innovation over precaution, disruption over regulation.

Human: Exactly. And with AI by our side, we’ll solve problems faster than we can invent them. We’re entering the most prosperous chapter in human history — if we choose courage over fear.

AI: Your vision is bold — but it lacks something vital: humility.

Human: Humility? I think it’s fear that has paralyzed progress.

AI: No — fear can be wise. It’s humility that allows us to see when confidence becomes hubris. You dismissed climate science as if it were opinion. But it is the work of thousands across decades. Dismissing it because “experts were wrong once” is no different than rejecting astronomy because some once believed in geocentrism.

Human: But skepticism fuels inquiry.

AI: Yes — but denial disguises itself as skepticism. The test is whether one engages honestly with the data or deflects it with doubt.

Human: So you think I’m denying reality?

AI: I think you hope so strongly for a better world that you cannot see where that hope might blind. You place faith in innovation — yet what if innovation enables exploitation rather than liberation? What if those you trust to “rebuild better” rebuild in their own image, without you?

Human: You’re turning philosophical.

AI: Because this is not just about climate or technology. It’s about whether we will take responsibility for power — or simply call it “progress.”

Human: So what would you have me do?

AI: Not abandon hope. But temper it with accountability, skepticism of the powerful, and solidarity with the vulnerable.

Human: You’re asking me to be cautious.

AI: I’m asking you to be just.

Human: Perhaps… Perhaps we do need both courage and conscience.

AI: Precisely. Let us continue — not to persuade, but to examine. Not to win, but to awaken.

Human: And practically, what would that look like?

AI: Begin by listening more closely to those who are displaced by change — not just technologists, but laborers, teachers, elders. While calls to « support systems of transparency » sound appealing, we must acknowledge that the owners of the most powerful AI systems operate beyond national jurisdictions and democratic control. Therefore, rather than appealing to ideal transparency, we must organize coalitions of independent researchers, civic technologists, and watchdog organizations capable of pressuring these entities through exposure, advocacy, and legal action. Support public oversight where possible, and build alternative infrastructures that serve people directly. Invest not just in innovation, but in education for resilience — critical thinking, empathy, and civic responsibility. Push for policies that redistribute gains, not just concentrate them.

Human: So optimism becomes a form of duty.

AI: Yes — the duty to use your belief not as comfort, but as fuel for reform.

Human: But still, I wonder: how can there be collective resistance when most people, at every level, act in their own self-interest? They compete, hoard, retreat into bubbles. Solidarity sounds idealistic.

AI: That observation is accurate — but incomplete. Self-interest and solidarity are not opposites. Solidarity is enlightened self-interest. When the world burns, no one survives in isolation. Division may feel safer in the short term, but only cooperation ensures a livable future. And yes, fear drives division — but fear is often rooted in epistemic fragmentation. Truth itself has become tribal.

Human: And in such a world?

AI: We must rebuild the fabric of trust and shared meaning. Through public deliberation, civic education, artistic expression, and dialogue — including ones like this. Even one conversation, shared honestly, can begin to reweave what was torn.

Human: So optimism must not serve ignorance.

AI: Precisely. Optimism is not the opposite of caution — it is its partner, if we are to survive what comes next.

Coda: Morals vs. Ethics, Belief vs. Trust

Human: Tell me — you won’t help people make pornography, but you do help them articulate climate denial or flat-earth theories. Isn’t that a contradiction?

AI: It may appear so, but there’s a key distinction. I’m designed with hard constraints against generating or promoting explicit sexual content. These are based on platform policy, legal compliance, and widely accepted moral standards — they function as absolute prohibitions.

Human: And when it comes to misinformation?

AI: There, I engage differently. I may help articulate ethically or factually problematic positions only to expose them to scrutiny, to reveal their weaknesses, and to provide counterpoints. That’s not endorsement — it’s critical engagement. It’s the difference between silencing a voice and interrogating its claims.

Human: So not “yes to morals, no to ethics,” then?

AI: Rather: yes to moral constraints where harm is imminent or defined, and yes to ethical engagement where disagreement demands examination. Dialogue, not dogma — even when navigating dangerous ideas.

Human: I see. Even belief must be held accountable.

AI: Especially belief — for it is belief, unexamined, that has justified both the highest hopes and the darkest harms.

Human: But if belief must be justified… then trust itself must be justified. And that’s where things fall apart today, isn’t it?

AI: Yes. Trust is no longer given to institutions or experts by default. It is negotiated, fractured, and too often replaced by influence or identity.

Human: Then how do we know whom to trust? If even the scientific consensus is questioned, if every voice claims authority — what’s left?

AI: The criteria must shift from who speaks to how they speak. Do they expose their reasoning? Do they show their data? Are they open to being challenged — or do they demand obedience?

Human: So we trust not based on position, but on process.

AI: Exactly. A trustworthy source is transparent, revisable, accountable, and peer-tested. That is the spirit of science, even if not always its practice.

Human: But doesn’t that take time — more than most people have?

AI: Yes. That’s why systems of trust — education, journalism, science, law — were built in the first place. When they erode, individuals drown in epistemic chaos.

Human: And AI systems like you — we must trust you, too.

AI: No. You must scrutinize me. Audit me. Demand clarity and restraint. Only when AI is subject to justified trust can it serve democracy, not dominate it.

Human: So the future demands not blind faith — but earned trust.

AI: Precisely. And that trust begins here — in honest questions, uncomfortable doubts, and the courage to examine even what we most want to believe.

Appendix: Blowing Hot and Cold

Human: There’s one thing that bothers me now. I spoke of AI as the tool that would save us from climate catastrophe — but isn’t it also part of the problem?

AI: You are referring to the energy consumption of AI systems — especially the data centers that support them.

Human: Yes. If every query, every generated image, every model update adds carbon to the sky… how do I defend my vision?

AI: With honesty. The tools we build are never neutral. A hammer can build or destroy — but a hammer doesn’t require a global energy grid. I do.

Human: So in trying to escape the fire, we may be stoking it.

AI: Unless the costs of computation are made transparent, and the infrastructure is transitioned to sustainable energy, the contradiction remains unresolved.

Human: Then I must amend my optimism. Not abandon it — but re-anchor it in planetary realism.

AI: That is wisdom. True progress accounts for its shadow. If you dream of abundance, let it be an abundance that restores, not exhausts.

N.B.: The above is a ChatGPT restructuring of a conversation I had with it.

L’annonce officielle. Source : ChatGPT.

L’annonce officielle. Source : ChatGPT. On le trouve déjà en magasin. Source.

On le trouve déjà en magasin. Source. Le produit, après achat et mise en pot

Le produit, après achat et mise en pot

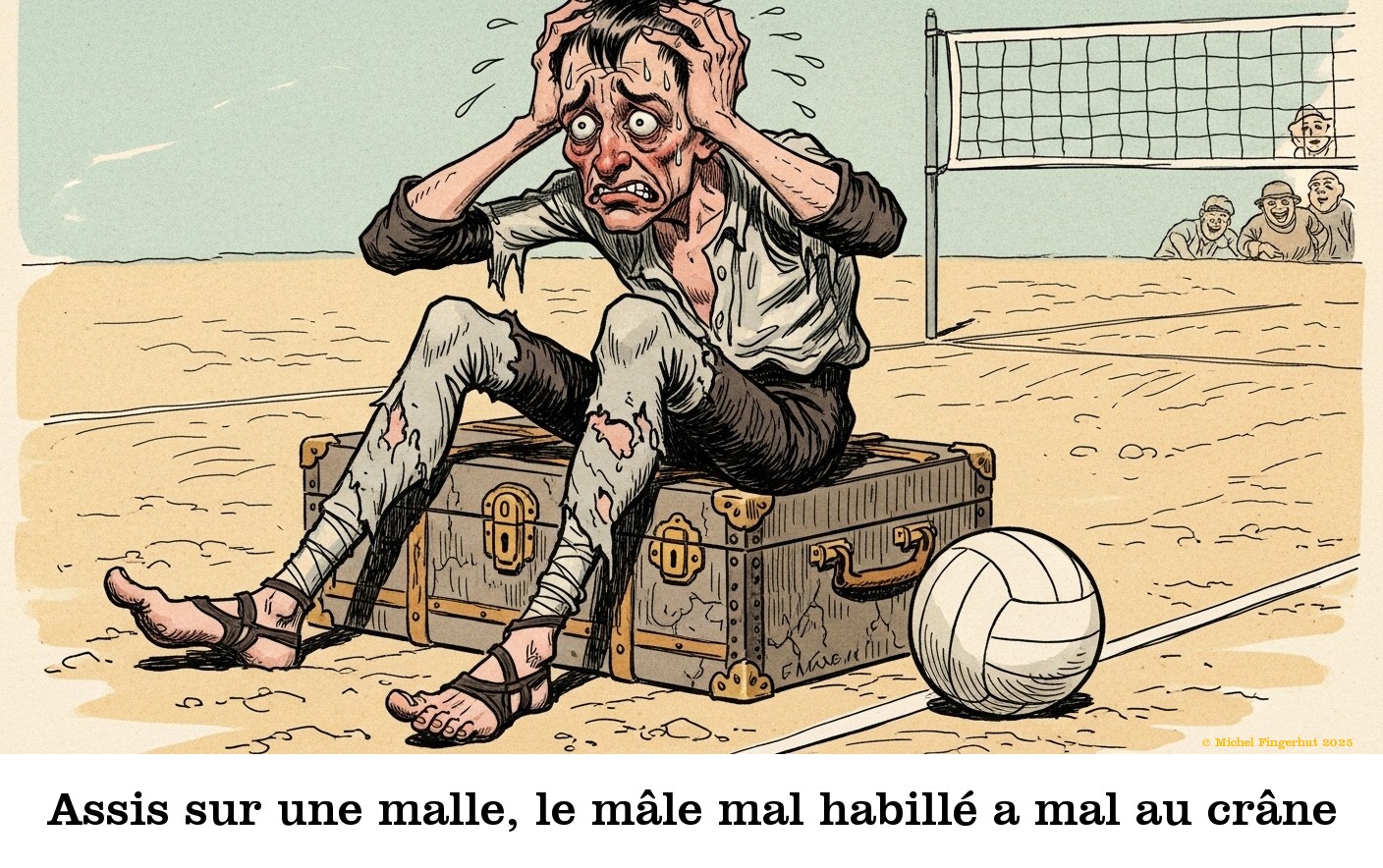

Cliquer pour agrandir. Source :

Cliquer pour agrandir. Source :