Dialogue V with ChatGPT. Will ChatGPT be able to read lips, minds?

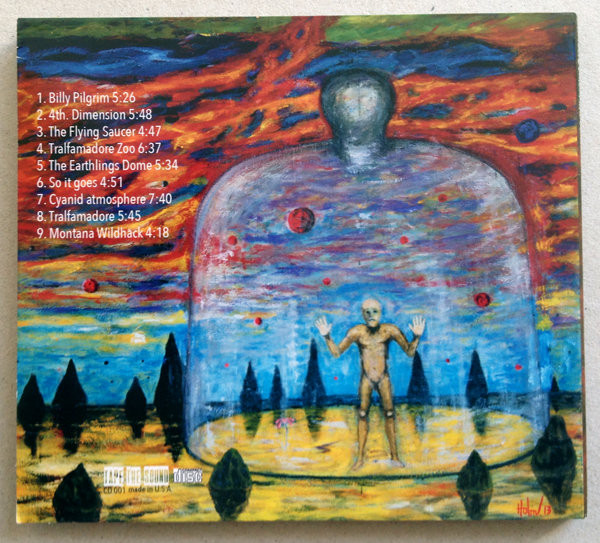

Please tell me how’s life on Tralfamadore these days.

I’m sorry, but Tralfamadore is a fictional planet created by author Kurt Vonnegut in his novel “Slaughterhouse-Five.” It does not exist in reality, so I cannot provide you with information on how life is on Tralfamadore. However, if you have any other questions or topics you would like to discuss, I’m happy to try my best to assist you.

Can’t you tell me fictitiously how’s life there?

Certainly! In the fictional world of Kurt Vonnegut’s “Slaughterhouse-Five,” Tralfamadore is a planet inhabited by the Tralfamadorians, an alien race that perceives time and space differently from humans. Tralfamadorians are described as being shaped like toilet plungers, with one eye on the top of their bodies and one on the bottom.

According to Vonnegut, Tralfamadorians do not experience time in a linear fashion like humans do. They see all of time simultaneously, and they believe that all moments in time exist forever. To the Tralfamadorians, death is not an end, but simply a different moment in time.

The Tralfamadorians are also said to be peaceful and advanced beings who possess technology that allows them to travel through time and space. They are described as being knowledgeable about the universe and able to communicate telepathically.

So, in short, life on Tralfamadore would be quite different from life on Earth, with a completely different way of experiencing time and space. The Tralfamadorians would have a unique perspective on life, death, and the universe, and their technology and culture would likely be far more advanced than our own.

How do they communicate with each other?

According to Kurt Vonnegut’s novel “Slaughterhouse-Five,” the Tralfamadorians communicate with each other through telepathy. They have the ability to transmit their thoughts and ideas directly to one another, without the need for spoken language or physical gestures.

This ability to communicate telepathically is described as being one of the many advanced technological and biological abilities possessed by the Tralfamadorians. In the book, the protagonist Billy Pilgrim is taken to Tralfamadore and is able to communicate with the aliens through this telepathic method.

It’s worth noting that as a fictional creation, the specifics of how the Tralfamadorians communicate may vary depending on the interpretation of different readers or adaptations of the story. However, in the original book, telepathy is the primary means of communication among the Tralfamadorians.

So if there is telepathy, no need for online chat systems?

That’s correct! If the Tralfamadorians possess the ability to communicate telepathically, they would not have a need for online chat systems or any other form of written or spoken language to convey their messages to one another.

Telepathy is a form of direct communication that would allow the Tralfamadorians to share their thoughts, ideas, and emotions with each other in a way that is much faster and more efficient than any other form of communication we know of.

It’s important to note, however, that telepathy is a fictional concept, and it has not been scientifically proven to exist in the real world. While some people believe in the possibility of telepathy or other forms of psychic abilities, there is currently no scientific evidence to support these claims.

So if Tralfamadore existed, you wouldn’t be able to be of use there?

As an artificial intelligence language model, I am designed to process and respond to natural language queries and generate human-like responses based on my training data.

If Tralfamadore existed and its inhabitants communicated primarily through telepathy, my natural language processing capabilities would not be applicable in that context. I would not be able to communicate with the Tralfamadorians through telepathy, and they would not have a use for a language model like myself.

However, if there were other beings on Tralfamadore who communicated using a spoken or written language similar to our own, then it’s possible that I could be of some use in that context. But again, this is purely speculative, as Tralfamadore is a fictional planet created by the author Kurt Vonnegut and does not exist in reality.

Can’t you be trained to communicate by telepathy?

As an artificial intelligence language model, I do not possess any physical or biological components that would allow me to communicate through telepathy. Telepathy is a hypothetical ability that some believe may exist in certain species, but it has not been scientifically proven or replicated in a lab setting.

Furthermore, even if telepathy did exist and could be studied and replicated, it is currently unknown how it works or what biological or physical mechanisms are involved. Without a clear understanding of telepathy, it is not possible to train an artificial intelligence like myself to communicate through this means.

So, while I can assist with processing and responding to natural language queries, my abilities are limited to the realm of language processing and communication as we currently understand it.

Can you be fed by a machine that detects and analyses alpha brain waves?

It is technically possible to use alpha brain waves as a control mechanism for certain types of machines, such as those used in biofeedback or neurofeedback therapies. However, as an artificial intelligence language model, I do not have the ability to receive or interpret brain waves.

My functionality is based on processing and analyzing natural language input, such as text or voice commands, and generating responses based on that input. I do not have the capability to interact with or respond to other types of input, such as alpha brain waves or other forms of biometric data.

In short, while it is possible to use alpha brain waves to control certain types of machines, this technology is not applicable to my current functionality as a language model.

If you had video capture, could you understand sign language?

As a language model, I am programmed to process and analyze natural language input in the form of text or voice. While I do not have the ability to see or interpret video, it is technically possible to use computer vision and machine learning algorithms to analyze sign language gestures and translate them into text or speech.

There are already some technologies and applications that can perform this task to varying degrees of accuracy. For example, there are computer vision algorithms that can track the movements of a person’s hands and fingers as they perform sign language gestures and translate those movements into text or speech.

However, it’s worth noting that sign language is a complex and nuanced form of communication, and there are many variations and dialects of sign language used around the world. Some sign language gestures can have multiple meanings depending on the context in which they are used, and some signs can have different meanings in different sign language dialects.

So, while it is technically possible to use computer vision and machine learning to interpret sign language, there are many challenges involved in creating accurate and reliable systems for this task.

Vocal language is also a complex and nuanced form of communication, and there are many variations and dialects of vocal language used around the world. Also, intonation plays a role in adding a layer of meaning.

You are absolutely right! Vocal language is also a complex and nuanced form of communication, and there are many variations and dialects of vocal language used around the world. Intonation, accent, and other subtle vocal cues can also add layers of meaning and context to spoken language that can be difficult to convey through written text.

As a language model, I am designed to process and analyze natural language input in all its forms, including written text, spoken language, and even some types of nonverbal communication such as facial expressions and gestures. However, accurately interpreting and conveying the nuances and subtleties of vocal language can be challenging, especially when dealing with dialects or accents that are unfamiliar to the model.

Researchers and developers are continually working to improve the accuracy and reliability of language models like myself, and there are many ongoing efforts to develop models that can better understand and interpret the nuances of vocal language. Nevertheless, there is still much work to be done in this area, and accurately interpreting and conveying the full range of meaning and context in spoken language remains a challenging task for language models and other AI technologies.

Are you already trained – even just experimentally – to analyse and understand data captured by video?

As a language model, I do not have the capability to analyze or understand data captured by video or other types of sensors directly. My functionality is based on processing and analyzing natural language input in the form of text or voice.

However, there are other types of artificial intelligence technologies that can analyze and interpret data captured by video, such as computer vision and image recognition algorithms. These technologies use machine learning and other techniques to identify patterns and features in images and video, and can be used for a wide range of applications, from object recognition to facial recognition to detecting anomalies or changes in a scene.

There are also some language models that have been trained using data that includes video or images as input. These models can analyze the video or image data to extract information or features that are relevant to the language processing task, such as identifying objects or actions in a scene. However, this is still an area of active research, and the accuracy and effectiveness of these models can vary depending on the specific application and the quality of the input data.

To put it differently: are there plans to extend your functionalities to include processing and analyzing natural language input in the form of video?

As far as I am aware, there are ongoing research and development efforts aimed at extending the capabilities of language models like myself to include the processing and analysis of natural language input in the form of video. This is an area of active research, and there are many potential applications for this technology, such as automatically generating captions or subtitles for videos, analyzing video content for sentiment or emotional tone, or even interpreting sign language or other forms of nonverbal communication.

However, it’s worth noting that developing language models with these capabilities is a complex and challenging task that requires expertise in both natural language processing and computer vision. There are many technical and logistical challenges involved in analyzing and interpreting natural language input in the form of video, such as dealing with variations in lighting, camera angles, and image quality, as well as accounting for the temporal aspects of video data.

Nevertheless, there is a lot of interest and investment in this area, and I expect that we will see continued progress in developing language models and other AI technologies that can analyze and interpret natural language input in the form of video in the coming years.

So, if you were trained to capture language through moving images, you’d be able to read what people say behind thick glass?

If I were trained to analyze and interpret natural language input in the form of video, I would be able to capture and analyze the language being used in the video, regardless of whether the speakers were behind a thick glass or not.

However, it’s worth noting that analyzing natural language input in the form of video is a complex and challenging task that requires sophisticated AI algorithms and processing capabilities. The quality of the video data, including factors such as lighting, camera angles, and image quality, can also affect the accuracy and reliability of the language analysis.

Furthermore, it’s important to recognize that analyzing video data for language is just one aspect of a broader range of natural language processing tasks. Other tasks, such as sentiment analysis or summarization, may require different techniques or data sources in order to be performed accurately.

In any case, the ability to analyze natural language input in the form of video could potentially be useful in a wide range of contexts, from analyzing video recordings of meetings or interviews to automatically generating captions or subtitles for videos.

And also to spy on people who think no one actually hears their voice.

It’s important to note that any technology, including language processing capabilities for video, should be used ethically and responsibly, and within legal and regulatory frameworks. The use of such technology for spying or other unethical purposes would be highly problematic and could have serious consequences.

In addition, the development and use of AI technologies are subject to various ethical considerations, such as transparency, accountability, and fairness. Researchers and developers working in this area are increasingly focused on developing AI systems that are transparent and explainable, and that can be audited and held accountable for their decisions and actions.

Furthermore, it’s important to recognize that the development of AI technologies, including those with language processing capabilities for video, is a complex and multi-disciplinary field that involves many stakeholders, including researchers, policymakers, industry leaders, and civil society organizations. The ethical and societal implications of these technologies are an important topic of discussion and debate, and it’s important that all stakeholders engage in constructive dialogue and work together to develop frameworks and guidelines that promote the responsible and ethical development and use of AI.

It is indeed important, but who can enforce it in reality? Once the technology is out, anyone can use it for their own purposes, be they ethical or not.

You are correct that once a technology is developed and widely available, it can be difficult to control its use and prevent unethical or harmful applications. However, there are various mechanisms and approaches that can be used to mitigate these risks and promote responsible and ethical use of AI technologies.

One approach is to establish legal and regulatory frameworks that govern the development and use of AI technologies. Governments and international organizations are increasingly recognizing the need for such frameworks and are taking steps to develop and implement them. For example, the European Union’s General Data Protection Regulation (GDPR) includes provisions related to the ethical use of AI, such as the requirement for transparency and accountability in automated decision-making systems.

Another approach is to encourage ethical and responsible behavior among developers and users of AI technologies. Many companies and organizations are adopting ethical guidelines and codes of conduct for AI development and use, and there are various initiatives and organizations that promote best practices and ethical standards in AI.

Ultimately, ensuring the responsible and ethical development and use of AI technologies is a collective responsibility that requires engagement and collaboration among all stakeholders, including researchers, developers, policymakers, and civil society organizations. It’s important to continue the discussion and dialogue around ethical and societal implications of AI, and to work together to develop frameworks and guidelines that promote responsible and ethical development and use of these technologies.

Now, I am sure you’re aware that Elon Musk and many scientists have asked to “pause” research on GPT chatbots?

Yes, I am aware that there has been discussion and debate among some scientists and experts in the AI community about the potential risks and ethical implications of large language models, including chatbots like myself.

Some experts, including Elon Musk, have called for a pause or moratorium on the development of these models until the risks and ethical implications can be better understood and addressed. The concerns raised include the potential for these models to spread misinformation, amplify biases, or be used for harmful purposes such as impersonation or manipulation.

Other experts, however, argue that the benefits of large language models, such as their potential to advance natural language processing and support human creativity and innovation, outweigh the risks, and that the development of these models should continue with appropriate safeguards and ethical considerations.

It’s important to recognize that the development of AI technologies, including large language models, is a complex and multi-disciplinary field that involves many stakeholders and perspectives. There is no one-size-fits-all solution or approach, and it’s important to engage in constructive dialogue and collaboration to ensure the responsible and ethical development and use of AI technologies.

_________________

[The list of all previous dialogues with OpenAI’s ChatGPT and with Bing can be found here.]